Evaluation Patterns

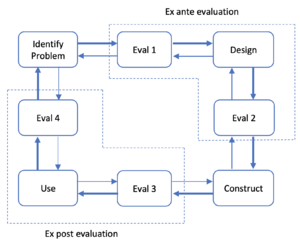

Figure: Evaluation pattern. Source: Redrawn from Sonnenberg and vom Brocke.

Latest revision as of 18:19, 8 November 2022

This article summarizes the evaluation patterns for Design Science Research (DSR) that reconsider the build-evaluate pattern, as described by Sonnenberg and vom Brocke [1] [2].

Current DSR processes have been criticized for placing the evaluation of an artefact in a strict sequence late in the design process. The DSR evaluation patterns described here attempt to address this limitation by accounting for the emergent nature of DSR artefacts. Evaluations are classified as ex-ante or ex-post according to when they are carried out: ex-ante evaluations take place before an artefact is built, and ex-post evaluations take place after it is built.

In the pattern, each evaluation activity feeds back into the design activity that precedes it. Together, these feedback loops form a feedback cycle that runs in the opposite direction to the DSR cycle. Depending on the context and purpose of an evaluation within the DSR process, individual evaluation activities may use different evaluation methods or patterns. Evaluation activities can also be combined into composite evaluation patterns, in which case the activities are highly integrated. One example of such a composite pattern is the Action Design Research method, which uses principles to connect building and evaluation activities. This article does not discuss composite patterns.

To illustrate their approach, Sonnenberg and vom Brocke distinguish four evaluation types (Eval 1 to Eval 4), which are derived from typical DSR activities. Each type is characterized by the input and output of the corresponding activity as well as by specific evaluation criteria and evaluation methods.

- Eval 1: Evaluating the problem identification: criteria include importance, novelty and feasibility.

- Eval 2: Evaluating the solution design: criteria include simplicity, clarity and consistency.

- Eval 3: Evaluating the solution instantiation: criteria include ease of use, fidelity with real-world phenomena and robustness.

- Eval 4: Evaluating the solution in use: criteria include effectiveness, efficiency and external consistency.
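The four evaluation types can also be written down as a small lookup structure, which may be handy when planning which criteria to check at which point of a project. The following is only an illustrative sketch: the names `Timing`, `EvalType`, `EVAL_TYPES` and `checklist` are hypothetical, and the assignment of Eval 1 and Eval 2 to ex-ante and Eval 3 and Eval 4 to ex-post is an assumption based on the definition above (an instantiated artefact first exists at Eval 3), not something the list itself states.

```python
from dataclasses import dataclass
from enum import Enum


class Timing(Enum):
    EX_ANTE = "ex-ante"  # evaluation before the artefact is built
    EX_POST = "ex-post"  # evaluation after the artefact is built


@dataclass(frozen=True)
class EvalType:
    name: str        # label used in the list above
    target: str      # what is being evaluated
    criteria: tuple  # example criteria named in the list above
    timing: Timing   # assumed classification, see lead-in


# Criteria are copied from the list above; the timing column is an assumption.
EVAL_TYPES = (
    EvalType("Eval 1", "problem identification",
             ("importance", "novelty", "feasibility"), Timing.EX_ANTE),
    EvalType("Eval 2", "solution design",
             ("simplicity", "clarity", "consistency"), Timing.EX_ANTE),
    EvalType("Eval 3", "solution instantiation",
             ("ease of use", "fidelity with real-world phenomena", "robustness"),
             Timing.EX_POST),
    EvalType("Eval 4", "solution in use",
             ("effectiveness", "efficiency", "external consistency"),
             Timing.EX_POST),
)


def checklist(timing):
    """Return (evaluation name, criterion) pairs for the given timing."""
    return [(e.name, c) for e in EVAL_TYPES if e.timing is timing for c in e.criteria]


if __name__ == "__main__":
    for name, criterion in checklist(Timing.EX_ANTE):
        print(f"{name}: {criterion}")
```

Calling `checklist(Timing.EX_POST)` instead would list the criteria for Eval 3 and Eval 4 under the same assumption.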

References

1. Sonnenberg, C., and vom Brocke, J. 2012. “Evaluation Patterns for Design Science Research Artefacts,” in Practical Aspects of Design Science, Communications in Computer and Information Science, M. Helfert and B. Donnellan (eds.), Berlin, Heidelberg: Springer, pp. 71–83. (https://doi.org/10.1007/978-3-642-33681-2_7).

2. Sonnenberg, C., and vom Brocke, J. 2012. “Evaluations in the Science of the Artificial – Reconsidering the Build-Evaluate Pattern in Design Science Research,” in Design Science Research in Information Systems. Advances in Theory and Practice, Lecture Notes in Computer Science, K. Peffers, M. Rothenberger, and B. Kuechler (eds.), Berlin, Heidelberg: Springer, pp. 381–397. (https://doi.org/10.1007/978-3-642-29863-9_28).